Enlarging the model's receptive field is important for effective 3D medical image segmentation. Traditional convolutional neural networks (CNNs) often struggle to capture global information from high-resolution 3D medical images. One proposed solution is to use depthwise convolution with a larger kernel size to capture a wider range of features. However, CNN-based approaches still struggle to model the relationships between distant pixels.
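
To see why depthwise convolution makes larger kernels affordable, the weight counts can be compared directly. The sketch below is only illustrative arithmetic: the channel width of 48 and the helper names are assumptions, not values from the paper.

```python
def conv3d_params(c_in, c_out, k):
    """Weights in a standard 3D convolution with a k*k*k kernel (bias ignored)."""
    return c_in * c_out * k ** 3


def depthwise_conv3d_params(channels, k):
    """Weights in a depthwise 3D convolution: one k*k*k filter per channel."""
    return channels * k ** 3


channels = 48  # hypothetical channel width, chosen only for illustration

for k in (3, 7):
    standard = conv3d_params(channels, channels, k)
    depthwise = depthwise_conv3d_params(channels, k)
    print(f"kernel {k}: standard={standard}, depthwise={depthwise}")
```

At this width, a 7 × 7 × 7 depthwise kernel needs fewer weights than a 3 × 3 × 3 standard convolution, which is why large-kernel designs tend to be depthwise. The receptive field is still bounded by the kernel size, though.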

Recently, transformer architectures that use self-attention to extract global information have been explored extensively for 3D medical image segmentation. For example, TransBTS combines a 3D CNN with transformers to capture both local spatial features and global dependencies in high-level features, and UNETR employs a Vision Transformer (ViT) as its encoder to learn contextual information. However, transformer-based methods often face computational challenges due to the high resolution of 3D medical images, which leads to slow inference.

To address the problem of long-sequence modeling, researchers previously introduced Mamba, a state-space model (SSM) that efficiently models long-range dependencies through a selection mechanism and a hardware-aware algorithm. Various studies have applied Mamba to computer vision (CV) tasks. For example, U-Mamba integrates Mamba layers to improve medical image segmentation.
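
The core idea of a state-space model can be sketched as a simple recurrence that carries a hidden state along the sequence. This is a deliberately simplified, scalar illustration with fixed parameters (`a`, `b`, and `c` are hypothetical values). Mamba's selection mechanism makes such parameters depend on the input, and its hardware-aware algorithm is not shown here.

```python
def ssm_scan(xs, a=0.9, b=0.1, c=1.0):
    """Run a scalar linear state-space recurrence over a sequence.

    h_t = a * h_{t-1} + b * x_t   (state update)
    y_t = c * h_t                 (readout)
    """
    h = 0.0
    ys = []
    for x in xs:
        h = a * h + b * x  # the state summarizes everything seen so far
        ys.append(c * h)
    return ys


# An impulse at the first position keeps influencing later outputs,
# decaying by a factor of `a` at each step.
print(ssm_scan([1.0, 0.0, 0.0], a=0.5, b=1.0, c=1.0))
```

The loop touches each element once, so the cost grows linearly with sequence length, whereas self-attention compares every pair of positions.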

At the same time, Vision Mamba proposes the Vim block, which incorporates bidirectional SSMs for global visual context modeling and position embeddings for location awareness. VMamba also introduces a Cross-Scan Module (CSM) to bridge the gap between 1D array scanning and 2D plane traversal. However, traditional transformer blocks face challenges in handling large features, and correlations within high-dimensional features still need to be modeled to enhance visual understanding.
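
The scanning problem described above can be illustrated with a toy example: a volume is flattened into a 1D sequence, then scanned in both directions so that every position receives context from both sides. This is a minimal sketch under stated assumptions, not the Vim block or the CSM. The function names, the row-major flattening order, and the scalar recurrence are all hypothetical choices for illustration.

```python
def flatten_volume(volume):
    """Flatten a nested D x H x W list into a 1D sequence in row-major order."""
    return [v for plane in volume for row in plane for v in row]


def scan(xs, a=0.5, b=1.0):
    """One-directional scalar recurrence: h_t = a * h_{t-1} + b * x_t."""
    h, ys = 0.0, []
    for x in xs:
        h = a * h + b * x
        ys.append(h)
    return ys


def bidirectional_scan(xs, a=0.5, b=1.0):
    """Sum a forward scan and a backward scan, aligned by position."""
    forward = scan(xs, a, b)
    backward = scan(xs[::-1], a, b)[::-1]
    return [f + g for f, g in zip(forward, backward)]


volume = [[[1.0, 0.0], [0.0, 0.0]],
          [[0.0, 0.0], [0.0, 2.0]]]  # toy 2 x 2 x 2 volume
sequence = flatten_volume(volume)
print(bidirectional_scan(sequence))
```

A forward-only scan would leave the first voxel with no information about the last one. Adding the backward pass lets both ends of the flattened sequence influence each other.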

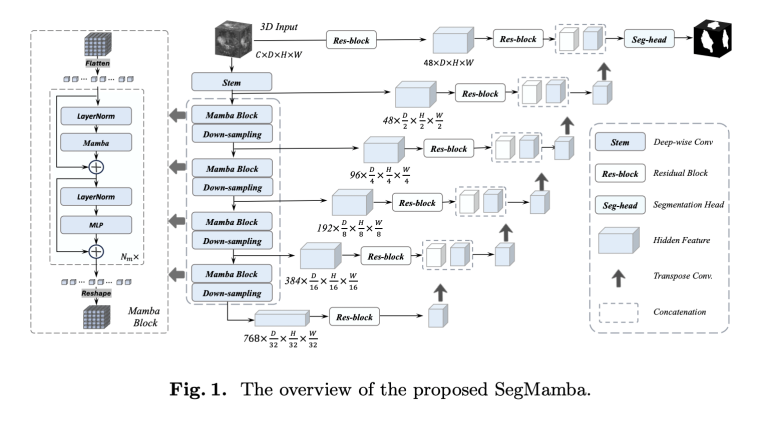

Inspired by this, researchers from the Beijing Academy of Artificial Intelligence introduced SegMamba, a new architecture that combines a U-shaped structure with Mamba to model global features of whole volumes at multiple scales. It applies Mamba specifically to 3D medical image segmentation. SegMamba demonstrates strong capabilities in modeling long-range dependencies in volumetric data while maintaining better inference efficiency than traditional CNN-based and transformer-based methods.

The researchers conducted extensive experiments on the BraTS2023 dataset to confirm the effectiveness and efficiency of SegMamba in 3D medical image segmentation tasks. Unlike transformer-based methods, SegMamba relies on state-space modeling principles to model whole-volume features while maintaining good processing speed. Even with volume features at a resolution of 64 × 64 × 64 (a sequence length of roughly 260k), SegMamba remains remarkably efficient.
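
The sequence length quoted above follows from flattening the volume into one token per voxel. The short calculation below reproduces that figure and, as a rough illustration only, counts the pairwise interactions that full self-attention would need at that length. The helper name is hypothetical.

```python
def sequence_length(depth, height, width):
    """Number of tokens when each voxel of the volume becomes one sequence element."""
    return depth * height * width


n = sequence_length(64, 64, 64)
attention_pairs = n * n  # full self-attention scores every pair of positions

print(n)                # 262144, i.e. roughly 260k
print(attention_pairs)  # 68719476736, i.e. 2**36
```

A method whose cost grows linearly with `n` processes about 2.6 × 10^5 steps here, while a quadratic one faces about 6.9 × 10^10 pairwise scores. That gap is the motivation for using a state-space model at this resolution.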

Check out the paper and GitHub. All credit for this research goes to the researchers of this project.

Arshad is an intern at MarktechPost. He is currently continuing his international studies. He holds a master's degree in physics from the Indian Institute of Technology, Kharagpur. Understanding things from the fundamentals leads to new discoveries and advances in technology. He is passionate about using tools such as mathematical models, ML models, and AI to understand things at a fundamental level.